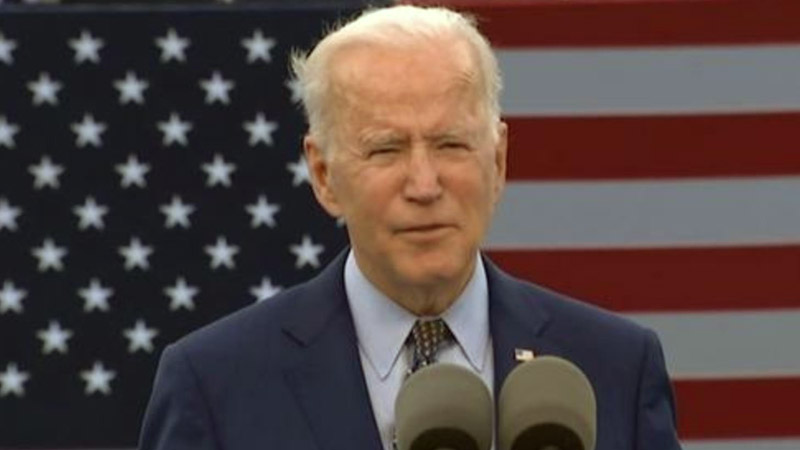

Deep-Faked Biden Voice in NH Primary Robocall Leads to Indictment: Consultant Faces Charges

Photo: AP Photo/Susan Walsh

Steve Kramer, a Democratic consultant, has been indicted on state charges over a controversial political robocall that used a “deep-faked” voice of President Joe Biden to tell New Hampshire voters not to vote in the primary election. The charges, as reported by NBC News and Manchester TV station WMUR, include bribery, intimidation, and voter suppression.

How Kramer will plead has not yet been disclosed. The robocall went out just before New Hampshire’s pivotal first-in-the-nation presidential primary in January. According to Alex Seitz-Wald of NBC News, it reached thousands of voters, using artificial intelligence to mimic President Biden’s voice and urging them to “save” their votes for the November general election instead.

This was the first known use of a deepfake in national American politics. It drew significant backlash from officials and prompted the Federal Communications Commission to propose a new rule banning unsolicited AI robocalls. Investigators tracing the robocall’s origins eventually identified Kramer.

The break in the case came when Paul Carpenter, a street magician who specializes in straitjacket escapes, revealed that Kramer had commissioned him to create the audio imitation of Biden. Carpenter provided screenshots of text messages and Venmo transactions as evidence of his involvement. When confronted, Kramer admitted to orchestrating the robocall but said his aim was to push for stricter regulation of AI, not to suppress voter turnout, according to The Hollywood Reporter.

The scandal has also implicated two telecommunications companies outside New Hampshire that transmitted the deceptive call, leading to their indictment. The case underscores the broader challenges posed by the rapid development and deployment of artificial intelligence across many sectors.

The law is struggling to keep pace with these advances, as another recent controversy involving OpenAI illustrates. The tech company faced criticism over a synthetic voice that allegedly resembled actress Scarlett Johansson and was reportedly created without her consent, putting AI ethics and legality in the spotlight once again.

As these technologies continue to evolve, they test new legal waters and raise significant ethical questions about privacy, consent, and the potential for misuse in sensitive areas such as elections and personal identity.